📘 Atenia Engine

Technical Whitepaper

Execution intelligence for AI systems that operate in the real world.

🧠 Executive Technical Summary

Modern AI systems increasingly fail not because models are incorrect, but because execution assumptions no longer hold in real-world environments. While model architectures, training methods, and optimization techniques have advanced rapidly, the execution layer has remained largely static — designed under assumptions of stable hardware, predictable memory availability, and controlled runtime conditions.

In practice, AI workloads operate on heterogeneous, shared, and dynamically constrained hardware. GPUs are not always exclusive or stable. Memory pressure fluctuates. Execution conditions change continuously. Under these conditions, traditional runtimes exhibit common failure modes:

- ⚠️ Out-of-memory errors

- 🔁 Execution policy thrashing

- ⏱️ Latency jitter

- 🧯 Fragile and reactive fallback behavior

These failures are not computational errors.

They are execution decision failures.

Atenia Engine addresses this gap by treating execution itself as a first-class adaptive system. Rather than relying on static heuristics or offline optimization, Atenia continuously observes real runtime conditions and reasons about how execution should proceed.

Execution decisions — such as placement, scheduling, aggressiveness, and fallback — are adapted dynamically based on current hardware behavior and accumulated execution experience, while computational semantics remain strictly unchanged.

The core principle of Atenia Engine is a strict separation between what is computed and how execution is carried out. Model definitions, numerical operations, and learning dynamics are treated as immutable.

All adaptation occurs exclusively at the execution level, allowing the system to respond to instability, uncertainty, and resource variability without introducing numerical drift or altering model correctness.

To achieve this, Atenia introduces an execution intelligence layer that continuously profiles runtime behavior, evaluates execution risk, and selects execution policies that prioritize stability and continuity over short-term optimization.

The engine incorporates mechanisms to:

- 🧠 Prevent policy oscillation

- 🎚️ Damp overreaction to noisy runtime signals

- 🧭 Regulate execution transitions under uncertainty

As a result, execution behavior converges toward stable strategies even under fluctuating and imperfect runtime conditions.

A distinctive feature of Atenia Engine is its ability to learn from execution experience — without machine learning. The system maintains persistent execution memory that captures execution-relevant outcomes across runs.

This memory enables Atenia to:

- ♻️ Improve execution decisions over time

- 🎯 Reduce unnecessary fallback and defensive behavior

- 🔒 Preserve deterministic and reproducible computation

Learning emerges from structured execution experience, not from model retraining or statistical inference.

Atenia Engine is designed to operate across heterogeneous environments and does not depend on vendor-specific assumptions or proprietary execution stacks. By treating hardware — CPU, GPU, memory, and storage — as dynamic decision variables rather than fixed execution targets, the engine remains robust under real-world deployment conditions where ideal hardware assumptions rarely hold.

This whitepaper presents the architectural principles and execution philosophy behind Atenia Engine. Detailed experimental validation, formal evaluation, and empirical results are provided in the accompanying scientific paper.

Together, they demonstrate that execution intelligence can be treated as a foundational system concern, enabling AI runtimes that remain stable, resilient, and continuous under real-world hardware uncertainty.

🔍 The Real Problem: Execution Fails in Practice

Modern AI runtimes are commonly designed under implicit assumptions about the execution environment. These assumptions include stable hardware availability, predictable memory capacity, consistent performance characteristics, and limited external interference.

While such conditions may hold in controlled benchmarking environments, they rarely reflect how AI systems are deployed and operated in practice.

In real-world scenarios, execution takes place on heterogeneous and shared infrastructure. GPUs are frequently contended by multiple workloads. Memory availability fluctuates over time. System-level interference introduces variability that cannot be fully predicted at design time.

Even in nominally dedicated environments, transient effects such as:

- 🧩 Memory fragmentation

- 🧱 Driver and runtime behavior

- 🌡️ Thermal constraints

- 🔄 Background system activity

can significantly alter effective execution conditions while execution is already in progress.

This mismatch between assumed hardware behavior and actual runtime conditions is the root cause of many execution failures observed in modern AI systems.

- ⚠️ Out-of-memory errors often occur not because total resources are insufficient, but because execution decisions fail to anticipate transient pressure or overlapping resource usage.

- 🔁 Execution policy thrashing emerges when runtimes react aggressively to noisy signals, repeatedly switching strategies without allowing sufficient time for stabilization.

- ⏱️ Latency jitter further disrupts scheduling and resource planning, amplifying instability over time.

Importantly, these failures do not indicate flaws in model computation. The mathematical operations remain correct. Model semantics are preserved.

What fails is the execution layer’s ability to reason about hardware as it exists in reality, rather than as it is assumed during offline optimization or system design.

Traditional runtimes tend to respond reactively — after a failure has already occurred — or rely on overly conservative safeguards that trade efficiency for safety, often at the cost of execution continuity.

A fundamental limitation of existing execution models is their reliance on static heuristics and precomputed decisions. Execution strategies are frequently derived from:

- 📊 Offline benchmarks

- 📐 Fixed thresholds

- 🧮 Simplified hardware models

These approaches cannot adapt when conditions change mid-execution. When real-world variability violates their assumptions, the system either collapses under unexpected pressure or overcorrects in ways that introduce oscillation and instability.

Recognizing that real hardware is inherently dynamic is essential. Robust AI systems must treat variability, contention, and uncertainty as normal operating conditions, not as exceptional cases.

Execution failures in practice are not edge cases to be patched around. They are symptoms of a deeper architectural gap between static execution models and dynamic runtime reality.

Atenia Engine is built on the premise that this gap cannot be closed through incremental safeguards alone. Addressing execution failures in practice requires rethinking execution as an adaptive, reasoning process — one that continuously aligns execution behavior with the hardware conditions that actually exist, not the ones assumed at design time.

⚠️ Why Existing Runtimes Break Under Reality

Most modern AI runtimes are highly sophisticated systems, optimized through years of engineering effort and research. They excel at executing computation efficiently under well-defined and controlled conditions, and have enabled remarkable advances in model scale and performance.

However, their design assumptions reflect a world where execution conditions are largely predictable and stable — a world that rarely exists in real production environments.

A common characteristic of existing runtimes is their reliance on static or locally optimized execution decisions. Key execution choices such as:

- 📍 Placement

- 🧵 Scheduling

- 💾 Memory allocation

- ⚙️ Kernel selection

are typically derived from compile-time analysis, offline benchmarking, or fixed heuristics.

While some limited runtime adaptivity may exist, it is usually constrained to narrow scopes and does not form a coherent, system-level model of execution behavior over time.

This design approach works well when hardware behavior closely matches optimization assumptions. But when conditions deviate — due to resource contention, fluctuating memory pressure, or shared infrastructure — execution decisions that were optimal in theory become fragile in practice.

Because these decisions are not continuously re-evaluated in light of actual runtime behavior, the system lacks the ability to adjust gracefully as conditions evolve.

Another fundamental limitation is the absence of explicit execution stability mechanisms. Many runtimes react immediately to runtime signals without sufficient temporal context, mistaking short-lived fluctuations for meaningful trends.

This often results in:

- 🔁 Execution policy thrashing

- 📉 Repeated strategy switching

- 📊 Overreaction to noisy observations

Rather than improving execution, such behavior amplifies instability and degrades overall system reliability.

Furthermore, most runtimes do not retain execution experience across runs in a structured and actionable form.

Each execution is largely treated as an isolated event, even when similar workloads are executed repeatedly under comparable conditions.

As a result:

- ♻️ The system cannot learn from prior execution outcomes

- 🚫 The same failure modes are encountered repeatedly

- 🛑 Unnecessary defensive behavior persists indefinitely

Importantly, these limitations do not stem from flawed computation models or inadequate optimization techniques.

They arise from treating execution as a secondary concern — an implementation detail rather than a system that must reason, adapt, and stabilize itself over time.

Existing runtimes are highly effective within their intended design scope. But that scope often excludes execution stability under dynamic and uncertain conditions.

Addressing these challenges does not require replacing existing frameworks or compilers.

It requires complementing them with an execution-centric perspective — one that acknowledges hardware variability as a first-class reality and equips the execution layer with the ability to:

- 👁️ Observe real runtime conditions

- 🧠 Reason about execution risk and stability

- 🔁 Adapt continuously over time

Without such a perspective, even the most advanced runtimes remain vulnerable when idealized assumptions collide with real-world execution.

🧠 Atenia Engine in One Idea

Execution that reasons — without touching semantics

At its core, Atenia Engine is built around a single guiding idea: execution should be able to reason about itself, while computation remains untouched.

This principle defines both the scope and the limits of the system. Atenia does not change what a model computes, how it learns, or how numerical results are produced.

Instead, it focuses exclusively on how execution decisions are made under real, dynamic hardware conditions.

In traditional AI systems, execution is often treated as a passive consequence of computation. Once a model is defined, execution strategies are largely fixed or derived from static rules, with limited capacity to adapt when conditions change.

Atenia challenges this view by treating execution as an active decision-making process — one that:

- 👁️ Continuously observes runtime conditions

- 🧠 Evaluates execution risk and stability

- 🎛 Selects execution strategies accordingly

The key to this approach is a strict separation between computational semantics and execution behavior.

Computational semantics define the mathematical meaning of the model:

- 🔢 Operations and precision

- 📐 Learning dynamics

- 📊 Numerical outputs

These remain deterministic and invariant.

Execution behavior, on the other hand, determines:

- 📍 Where computation runs

- ⏱ When it runs

- 🧩 How it is mapped onto available hardware resources

Atenia allows execution behavior to adapt freely, while enforcing immutability at the semantic level.

By reasoning at the execution layer, Atenia can respond to hardware variability without introducing numerical drift or altering model correctness.

Decisions such as:

- 🎚 Adjusting execution aggressiveness

- 🔀 Shifting placement across resources

- 🛡 Activating or suppressing fallback strategies

are made transparently, while preserving identical computational outcomes.
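The separation described above can be illustrated with a minimal Rust sketch. It is not Atenia's actual API: `ExecPolicy`, `op`, `run`, and `bitwise_equal` are hypothetical names introduced only for this example. The policy changes how the work is batched, while the per-element operation stays fixed, so the outputs can be compared bit for bit.

```rust
/// Hypothetical execution policies: they describe how work is carried out.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ExecPolicy {
    /// Process the whole input in a single pass.
    Aggressive,
    /// Process the input in smaller batches to limit peak resource use.
    Conservative { batch: usize },
}

/// The semantic part: a pure per-element operation that no policy may alter.
fn op(x: f32) -> f32 {
    x * 2.0 + 1.0
}

/// The execution part: the policy decides batching, never the math.
pub fn run(input: &[f32], policy: ExecPolicy) -> Vec<f32> {
    match policy {
        ExecPolicy::Aggressive => input.iter().map(|&x| op(x)).collect(),
        ExecPolicy::Conservative { batch } => {
            let mut out = Vec::with_capacity(input.len());
            // `max(1)` guards against a zero batch size, which `chunks` rejects.
            for chunk in input.chunks(batch.max(1)) {
                out.extend(chunk.iter().map(|&x| op(x)));
            }
            out
        }
    }
}

/// Bitwise comparison of two result vectors, stricter than `==` on floats.
pub fn bitwise_equal(a: &[f32], b: &[f32]) -> bool {
    a.len() == b.len() && a.iter().zip(b).all(|(x, y)| x.to_bits() == y.to_bits())
}
```

In this sketch, `run(&data, ExecPolicy::Aggressive)` and `run(&data, ExecPolicy::Conservative { batch: 3 })` produce bitwise-identical vectors, because each element is computed by the same `op` regardless of how the input is batched.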

This design enables a form of adaptation that is fundamentally different from traditional optimization.

Rather than chasing peak performance under assumed conditions, Atenia prioritizes:

- 🧱 Sustained execution continuity

- 🔮 Stability under uncertainty

- 📈 Convergence toward reliable execution behavior over time

Execution strategies are evaluated not only by immediate efficiency, but by their ability to remain stable as conditions fluctuate.

In essence, Atenia Engine treats execution as a control system rather than a fixed pipeline.

It observes. It reasons. It adapts.

Computation remains pure, deterministic, and unchanged.

This single idea underpins the entire architecture and distinguishes Atenia from systems that conflate execution decisions with computational semantics.

🧠 Execution Intelligence: The Missing Layer

Most AI systems are built around two clearly defined concerns: computation and hardware.

Computation specifies what should be calculated. Hardware provides the resources on which those calculations run.

Between these two layers, execution is often treated as an implicit mechanism — a set of rules that translate computation into hardware activity without independent reasoning or long-term awareness.

Execution intelligence fills this missing layer.

It represents the capability of a system to:

- 👁️ Observe its own execution behavior

- 🧠 Reason about real runtime conditions

- 🎛 Make informed decisions about how execution should proceed

Rather than acting as a passive conduit between computation and hardware, execution intelligence operates as an active control layer that continuously aligns execution behavior with real-world conditions.

In the absence of execution intelligence, runtimes rely on static assumptions and localized optimizations.

Decisions such as:

- 📍 Placement

- 🧵 Scheduling

- 💾 Resource utilization

are typically made without a global understanding of:

- ⚠️ Execution stability

- 📉 Risk accumulation

- 🔁 Historical execution outcomes

When conditions change, these systems can only react locally or defensively — often after instability has already emerged.

Execution intelligence introduces a fundamentally different perspective.

It treats execution as a dynamic process that unfolds over time, where decisions have consequences beyond a single operation or kernel launch.

By observing runtime signals and correlating them with execution outcomes, an intelligent execution layer can:

- 🧭 Distinguish transient fluctuations from meaningful shifts

- 📊 Understand execution context evolution

- 🎯 Select strategies with long-term stability in mind

Crucially, execution intelligence does not require altering computational semantics or embedding learning mechanisms into the model itself.

It operates entirely at the execution level, reasoning about:

- ⚙️ Hardware behavior

- ⚠️ Execution risk

- 🧱 Stability patterns

This allows the system to adapt execution strategies without interfering with model correctness or reproducibility.

A defining characteristic of execution intelligence is its temporal awareness.

Rather than responding instantly to every signal, it evaluates:

- ⏳ Trends over time

- 🎚 Confidence in observed signals

- 🧠 Accumulated execution experience

This enables controlled adaptation, prevents oscillatory behavior, and supports convergence toward stable execution strategies.
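One way to picture temporal awareness is a tracker that smooths a raw signal and accepts a shift only after it has persisted. The sketch below is an assumption for illustration, not Atenia's mechanism: it combines an exponential moving average with a consecutive-sample confirmation count, and `SignalTracker`, `alpha`, `threshold`, and `confirm` are hypothetical names.

```rust
/// Hypothetical tracker for one runtime signal, e.g. memory pressure in 0.0..=1.0.
pub struct SignalTracker {
    alpha: f64,       // smoothing factor in (0, 1]; smaller values smooth more
    threshold: f64,   // smoothed level above which the signal counts as elevated
    confirm: u32,     // consecutive elevated samples required to accept a shift
    ema: Option<f64>, // current exponential moving average, None before any sample
    streak: u32,      // current run of elevated samples
}

impl SignalTracker {
    pub fn new(alpha: f64, threshold: f64, confirm: u32) -> Self {
        Self { alpha, threshold, confirm, ema: None, streak: 0 }
    }

    /// Feed one raw sample. Returns true only once the elevation is sustained.
    pub fn observe(&mut self, sample: f64) -> bool {
        let ema = match self.ema {
            None => sample,
            Some(prev) => self.alpha * sample + (1.0 - self.alpha) * prev,
        };
        self.ema = Some(ema);
        if ema > self.threshold {
            self.streak += 1;
        } else {
            self.streak = 0;
        }
        self.streak >= self.confirm
    }
}
```

With `SignalTracker::new(0.5, 0.8, 3)`, a single spike to 1.0 between samples of 0.5 never reports a shift, while three consecutive samples of 1.0 afterwards do. The smoothing absorbs the spike, and the confirmation count stands in for confidence in the observed signal.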

By introducing execution intelligence as a first-class system layer, Atenia Engine addresses a structural gap in current AI runtimes.

It does not replace existing frameworks or optimizers. Instead, it complements them by providing the missing reasoning layer that allows execution to remain:

- 🧱 Coherent

- 🔒 Stable

- 🔮 Resilient

under real-world execution conditions.

🧱 Stability First: Why Thrashing Is the Enemy

In adaptive execution systems, instability is often more damaging than inefficiency. While suboptimal execution may reduce performance, unstable execution undermines reliability, predictability, and long-term progress.

Among the most harmful forms of instability is execution thrashing — the rapid and repeated switching of execution strategies in response to noisy or transient signals.

Thrashing occurs when a runtime reacts too aggressively to short-lived changes in execution conditions.

Minor fluctuations in:

- 📉 Resource availability

- ⏱️ Latency

- 💾 Memory pressure

are misinterpreted as meaningful shifts, triggering immediate policy changes.

Instead of converging toward a stable execution strategy, the system enters a feedback loop where adaptation itself becomes the source of instability.

The consequences of thrashing extend far beyond performance degradation.

- 🔄 Frequent policy switches introduce execution overhead

- ⚠️ Resource contention increases

- 📊 Latency variability is amplified

More critically, thrashing prevents the accumulation of reliable execution experience.

When execution strategies change continuously, the runtime cannot determine which decisions are genuinely effective, leading to repeated exposure to the same failure modes.

Many existing runtimes implicitly prioritize responsiveness over stability.

While rapid reaction may seem desirable, unregulated responsiveness in noisy environments often produces the opposite effect:

- 📉 Execution becomes erratic

- 🧠 Confidence in decisions erodes

- 🛑 Fallback mechanisms trigger unnecessarily

In such systems, adaptation does not lead to improvement. It leads to oscillation.

Atenia Engine adopts a fundamentally different stance:

Stability is the prerequisite for meaningful adaptation.

Rather than optimizing for immediate reaction, Atenia evaluates execution behavior over time. Execution decisions are regulated through:

- ⏳ Temporal awareness

- 🎚 Confidence assessment

- 🧠 Memory-based smoothing

ensuring that strategy changes reflect sustained trends rather than transient noise.
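To make the contrast with thrashing concrete, the sketch below compares a naive single-threshold controller with a regulated one. The regulated version uses hysteresis (two thresholds) and a minimum dwell time. These are standard control techniques swapped in here for illustration, and the source does not state that Atenia uses them. `Policy`, `naive_switches`, and `regulated_switches` are hypothetical names.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Policy {
    Fast,
    Safe,
}

/// Naive controller: switches whenever the raw signal crosses one threshold.
/// Returns the number of policy switches over the signal.
pub fn naive_switches(signal: &[f64], threshold: f64) -> usize {
    let mut current = Policy::Fast;
    let mut switches = 0;
    for &s in signal {
        let wanted = if s > threshold { Policy::Safe } else { Policy::Fast };
        if wanted != current {
            current = wanted;
            switches += 1;
        }
    }
    switches
}

/// Regulated controller: separate enter/leave thresholds plus a minimum
/// number of steps that must pass between two switches.
pub fn regulated_switches(
    signal: &[f64],
    enter_safe: f64,
    leave_safe: f64,
    min_dwell: usize,
) -> usize {
    let mut current = Policy::Fast;
    let mut since_switch = min_dwell; // the first switch is allowed immediately
    let mut switches = 0;
    for &s in signal {
        since_switch = since_switch.saturating_add(1);
        let wanted = match current {
            Policy::Fast if s > enter_safe => Policy::Safe,
            Policy::Safe if s < leave_safe => Policy::Fast,
            keep => keep,
        };
        if wanted != current && since_switch >= min_dwell {
            current = wanted;
            since_switch = 0;
            switches += 1;
        }
    }
    switches
}
```

On a signal that alternates between 0.79 and 0.81 for eight samples, the naive controller with a threshold of 0.8 switches seven times, while the regulated controller with thresholds 0.85 and 0.70 does not switch at all. The gap between the two thresholds is what keeps small fluctuations from triggering a change.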

By treating stability as a first-class objective, Atenia prevents execution from overcorrecting under uncertainty.

Policies are given sufficient time to demonstrate their effectiveness, and transitions are introduced deliberately rather than impulsively.

This controlled approach allows execution behavior to converge toward stable strategies even when runtime signals remain imperfect.

Prioritizing stability does not mean abandoning adaptation or performance awareness.

It means recognizing that reliable execution emerges from controlled, coherent decision-making, not from constant adjustment.

By eliminating thrashing as a systemic behavior, Atenia enables execution strategies that are:

- 🧱 Robust

- 🔒 Predictable

- 🧠 Capable of learning from experience

under real-world execution conditions.

🧪 Safe Adaptation via Virtual Execution

Adapting execution strategies in real time carries inherent risk. While exploration is necessary to improve execution behavior, blindly testing new strategies on physical hardware can lead to instability, resource exhaustion, or outright execution failure.

In many systems, this risk forces an undesirable trade-off:

- 🛑 Limit adaptation to safe but overly conservative strategies

- ⚠️ Accept disruptive execution during experimentation

Both options constrain progress.

Atenia Engine avoids this trade-off by separating decision evaluation from physical execution.

Rather than immediately applying new execution strategies to real hardware, Atenia first evaluates them within a virtual execution context.

This virtual execution does not emulate hardware at the instruction level. Instead, it models execution-relevant behavior, such as:

- 💾 Resource pressure

- ⚠️ Feasibility constraints

- 🧱 Execution risk boundaries

The purpose of virtual execution is not to predict exact performance metrics.

Its role is to answer a simpler — and more critical — question:

Is this execution strategy safe to try?

By estimating whether a candidate strategy is likely to:

- 🚫 Exceed memory constraints

- 🔁 Introduce excessive contention

- ⚠️ Destabilize execution

Atenia filters out unsafe options before they ever reach physical hardware.
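The filtering step can be sketched as a feasibility check that runs on estimates only and never touches hardware. Everything in the example is an assumption made for illustration: the `Candidate` and `Snapshot` fields, the fixed memory reserve, and the stream limit are hypothetical and are not taken from Atenia's implementation.

```rust
/// Hypothetical estimate of what a candidate strategy would demand.
#[derive(Debug, Clone)]
pub struct Candidate {
    pub name: &'static str,
    pub est_peak_mem_bytes: u64,
    pub est_concurrent_streams: u32,
}

/// Hypothetical snapshot of current runtime conditions.
pub struct Snapshot {
    pub free_mem_bytes: u64,
    pub reserve_bytes: u64, // memory kept in reserve as a safety margin
    pub max_streams: u32,   // concurrency level beyond which contention is assumed
}

#[derive(Debug, PartialEq, Eq)]
pub enum Verdict {
    Safe,
    ExceedsMemory,
    ExcessiveContention,
}

/// Evaluate a candidate against the snapshot without executing anything.
pub fn evaluate(c: &Candidate, s: &Snapshot) -> Verdict {
    let budget = s.free_mem_bytes.saturating_sub(s.reserve_bytes);
    if c.est_peak_mem_bytes > budget {
        return Verdict::ExceedsMemory;
    }
    if c.est_concurrent_streams > s.max_streams {
        return Verdict::ExcessiveContention;
    }
    Verdict::Safe
}

/// Keep only the candidates judged safe to try on real hardware.
pub fn filter_safe<'a>(cands: &'a [Candidate], s: &Snapshot) -> Vec<&'a Candidate> {
    cands.iter().filter(|c| evaluate(c, s) == Verdict::Safe).collect()
}
```

The check answers only whether a strategy is safe to try, not how fast it would run, which matches the role described for virtual execution above.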

This approach allows the engine to explore alternative execution paths without exposing real systems to unnecessary risk.

Unsafe or unstable strategies are discarded early. Viable candidates are passed to real execution under controlled conditions.

As a result, adaptation becomes proactive rather than reactive — preventing failures instead of responding to them after the fact.

Virtual execution also enables Atenia to reason about execution transitions.

When shifting between strategies, the engine evaluates not only the target strategy, but also the stability implications of the transition itself.

This ensures that execution changes are introduced gradually and coherently, avoiding abrupt shifts that could destabilize in-flight computation.

For engineers, the practical impact is straightforward:

Adaptation no longer requires sacrificing reliability.

Execution strategies can be evaluated, refined, and selected with an explicit safety barrier between decision-making and hardware.

The system gains the freedom to adapt intelligently while maintaining execution continuity, even under uncertain and fluctuating runtime conditions.

By incorporating virtual execution as a planning layer, Atenia transforms adaptation from a risky experiment into a controlled engineering process — one that balances exploration with safety and aligns execution behavior with real-world constraints.

🧠 Learning from Execution (Without ML)

Most adaptive systems equate learning with machine learning: models, parameters, training cycles, and statistical optimization.

Atenia Engine deliberately takes a different path. It improves execution behavior over time without introducing machine learning into the runtime and without modifying computational semantics.

Learning in Atenia occurs entirely at the execution level.

The system observes how execution decisions behave under real conditions and retains structured knowledge about their outcomes.

This knowledge is stored as execution experience — not as model parameters, not as learned weights, and not as opaque statistical state.

Over time, the engine uses this experience to inform future execution decisions, favoring strategies that have demonstrated stability and reliability under similar conditions.

Execution experience is captured in a compact and actionable form.

Rather than storing raw metrics or full execution traces, Atenia records distilled information about:

- 🧭 Execution context

- 🎛 Selected execution strategies

- 📊 Observed outcomes and stability signals

This allows the system to recognize recurring execution scenarios and recall which strategies were:

- 🧱 Stable

- ⚠️ Risky

- 🚫 Ineffective

in the past.
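A rough sketch of such an execution memory is a plain lookup table from execution context to recorded outcomes. The layout below is an assumption: `ContextKey`, its fields, `Outcome`, and `ExecutionMemory` are hypothetical names, and persistence to disk is left out. Recall is an exact key lookup with no statistical inference, so the same history always yields the same answer.

```rust
use std::collections::HashMap;

/// Hypothetical, coarse description of an execution context.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
pub struct ContextKey {
    pub workload: String,
    pub mem_bucket: u8, // coarse memory-pressure bucket
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Outcome {
    Stable,
    Risky,
    Ineffective,
}

/// Distilled experience: for each context, the strategies tried and how they went.
#[derive(Default)]
pub struct ExecutionMemory {
    records: HashMap<ContextKey, Vec<(String, Outcome)>>,
}

impl ExecutionMemory {
    /// Record the outcome of running `strategy` in context `ctx`.
    pub fn record(&mut self, ctx: ContextKey, strategy: &str, outcome: Outcome) {
        self.records
            .entry(ctx)
            .or_default()
            .push((strategy.to_string(), outcome));
    }

    /// Most recently recorded strategy that was stable in this context, if any.
    pub fn recall_stable(&self, ctx: &ContextKey) -> Option<&str> {
        self.records
            .get(ctx)?
            .iter()
            .rev()
            .find(|(_, o)| *o == Outcome::Stable)
            .map(|(s, _)| s.as_str())
    }
}
```

A cold run finds nothing for its context and `recall_stable` returns `None`. A later run in the same context gets back the strategy that previously proved stable. Every decision can be traced to a specific recorded entry, which keeps the mechanism inspectable.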

Because this learning is experience-driven rather than statistical, it remains:

- 🔒 Deterministic

- 👁️ Transparent

- 📘 Fully interpretable

There is:

- 🚫 No retraining phase

- 🚫 No convergence uncertainty

- 🚫 No hidden state that alters computation

Improvements emerge through better execution decisions, not through changes in how computation is performed.

When the same workload is executed again under similar conditions, Atenia can converge immediately toward stable execution behavior instead of repeating exploratory or defensive actions.

A practical consequence of this approach is the reduction of unnecessary fallback and overconservative behavior.

Cold executions may require defensive strategies while execution confidence is low.

Warm executions, informed by prior experience, can proceed directly with stable execution strategies, avoiding repeated risk mitigation when it is no longer needed.

By separating execution learning from model learning, Atenia avoids the complexity, opacity, and fragility often associated with embedding machine learning into system control paths.

Learning remains:

- 📐 Bounded

- 👁️ Interpretable

- 🧱 Aligned with execution stability

rather than optimization objectives.

This form of learning transforms execution from a stateless process into an evolving system.

Without relying on machine learning, Atenia demonstrates that execution can improve through experience alone — becoming more stable, predictable, and resilient over time, while preserving deterministic computation and reproducibility.

✅ Proof, Not Promises

Atenia Engine makes explicit claims about execution stability, resilience, and learning from experience.

These claims are not based on projected benchmarks, synthetic scores, or aspirational performance targets. They are grounded in observable execution behavior, validated through executable tests.

Rather than presenting isolated metrics or peak performance numbers, Atenia focuses on how execution behaves under realistic and adverse conditions.

The objective is not to demonstrate how fast execution can be under ideal assumptions, but how reliably it continues when those assumptions break.

Every core property described in this whitepaper is backed by tests that exercise the execution engine directly.

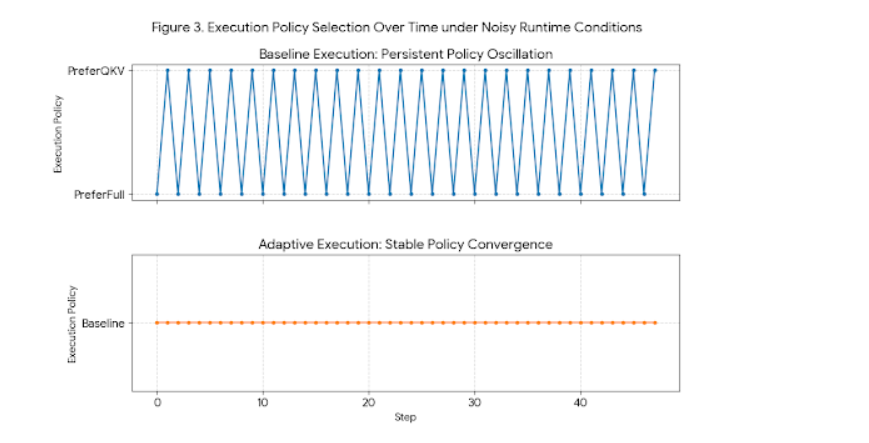

🧱 Stability under noisy runtime conditions

Claim: Execution converges toward stable strategies instead of oscillating in response to transient signals.

Evidence: Test results shown below.

🔒 Semantic Correctness Under Adaptation

Execution policies may change dynamically in response to runtime conditions, but computational semantics remain strictly unchanged.

No numerical drift is introduced, and computational results remain identical across adaptive executions, demonstrating that adaptation occurs exclusively at the execution level.

🛡 Predictive Fallback and Execution Continuity

Execution risk is detected early, before failures occur, allowing mitigation to be applied proactively.

As a result, execution continues smoothly without aborts or hard failures, preserving execution continuity even under elevated and fluctuating runtime pressure.
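As a simplified illustration of detecting risk before a failure, the sketch below extrapolates a pressure signal along its recent average slope and flags a likely breach ahead of time. Linear extrapolation is an assumption chosen for brevity, since the source does not describe how Atenia predicts risk, and `predicts_breach`, `limit`, and `horizon` are hypothetical names.

```rust
/// Returns true if the signal is already at or above `limit`, or if continuing
/// along the window's average slope would reach `limit` within `horizon` steps.
pub fn predicts_breach(window: &[f64], limit: f64, horizon: usize) -> bool {
    let last = match window.last() {
        Some(&v) => v,
        None => return false, // no observations, nothing to predict
    };
    if last >= limit {
        return true;
    }
    if window.len() < 2 {
        return false; // a single sample carries no trend
    }
    // Average change per step across the observed window.
    let slope = (last - window[0]) / (window.len() - 1) as f64;
    if slope <= 0.0 {
        return false; // flat or falling signal
    }
    last + slope * horizon as f64 >= limit
}
```

For a window of `[0.50, 0.55, 0.60, 0.65]` and a limit of 0.9, the function returns false for a horizon of 3 steps and true for a horizon of 6. A runtime could use such a signal to apply mitigation while there is still headroom.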

Figure: Execution policy selection over time under noisy runtime conditions.

Baseline execution exhibits persistent policy oscillation, frequently switching between execution strategies under runtime noise and transient signals.

In contrast, Atenia’s adaptive execution converges toward a stable policy and maintains it throughout execution, effectively eliminating policy thrashing.

♻️ Learning Across Executions (Warm vs. Cold)

Prior execution experience significantly reduces unnecessary fallback behavior and accelerates convergence toward stable execution strategies.

Cold executions may require defensive adaptation, while warm executions leverage accumulated experience to proceed directly with stable and confident execution behavior.

| Phase | Iterations | Fallback Count | Policy Switches | Selected Policy |
| --- | --- | --- | --- | --- |
| Cold | 24 | 2 | 1 | Baseline |
| Warm | 24 | 0 | 0 | PreferQKV |

These behaviors are not inferred; they are observed.

Tests are designed to isolate execution phenomena such as policy thrashing, risk accumulation, and stabilization dynamics, allowing their impact to be evaluated directly rather than indirectly through aggregate performance metrics.

Reproducibility is a deliberate design constraint.

All validation is performed through executable tests rather than opaque evaluation pipelines. There are:

- 🚫 No hidden benchmarks

- 🚫 No proprietary datasets

- 🚫 No unpublished tuning steps

Execution behavior can be verified directly by running the test suite:

cargo test

If the tests pass, the execution behavior described above has been reproduced.

This approach reflects a broader philosophy:

Trust should be earned through behavior, not claims.

By grounding its assertions in reproducible execution outcomes, Atenia Engine shifts validation from promise-driven narratives to observable system behavior.

Detailed experimental methodology, quantitative analysis, and formal evaluation are provided in the accompanying scientific paper.

This whitepaper presents the evidence at a conceptual level, while the paper establishes the full experimental rigor behind each claim.

📄 How This Relates to the Scientific Paper

This whitepaper is intentionally not a substitute for the scientific publication.

Its purpose is to provide architectural context, execution philosophy, and high-level evidence for Atenia Engine, without the formal structure and density required for academic review.

The accompanying scientific paper presents the full technical contribution in a rigorous, citable form.

It includes:

- 📘 Detailed motivation

- 🧠 Formal system description

- 🧪 Experimental methodology

- 📊 Quantitative evaluation

- 📚 Discussion of limitations and related work

All experimental claims summarized in this whitepaper are derived directly from that work.

The relationship between the two documents is explicit and deliberate:

- This whitepaper explains what Atenia Engine is, why it exists, and how it behaves in practice.

- The scientific paper demonstrates why those claims are valid, how they were evaluated, and under which conditions they hold.

No experimental results are introduced in the whitepaper that are not fully backed by the scientific paper.

Likewise, no technical rigor is removed or simplified in translation — only the level of detail and presentation differs to match audience and intent.

Readers seeking formal definitions, experimental setups, quantitative metrics, and methodological depth are encouraged to consult the scientific paper directly:

Atenia Engine: Hardware-Adaptive Execution Intelligence for Stable and Resilient AI Runtime Systems

Together, these documents serve complementary roles.

The whitepaper provides an accessible but technically faithful overview of execution intelligence in Atenia Engine, while the scientific paper establishes the formal foundation and reproducible evidence behind every claim.

🧭 Scope, Limits, and Roadmap (APX)

Atenia Engine is developed with a deliberately constrained and explicit scope.

Its purpose is not to redefine model architectures, training algorithms, or numerical optimization techniques, but to address a specific and critical gap: execution stability and resilience under real, dynamic hardware conditions.

📦 Scope — Current State

As of the current implementation, Atenia Engine provides:

- 🧠 Execution intelligence as a dedicated system layer

- 👁️ Continuous runtime observation and profiling

- 🎛 Adaptive execution policy selection under noisy conditions

- 🧱 Explicit prevention of execution thrashing and oscillation

- 🛡 Predictive fallback mechanisms to preserve execution continuity

- ♻️ Persistent execution memory enabling learning across executions

- 🔒 Adaptation without altering computational semantics or numerical correctness

All of these capabilities are fully implemented, tested, and operational.

Atenia Engine does not rely on simulated claims or conceptual placeholders for these features. They are part of the active runtime system.

🚧 Limits — Intentional Boundaries

Atenia Engine does not attempt to optimize peak performance under idealized conditions, nor does it guarantee maximal throughput or minimal latency in isolation.

In some scenarios, the system deliberately trades short-term efficiency for long-term execution stability and continuity.

The current execution intelligence model focuses on single-node execution contexts and reasons primarily about runtime signals such as:

- 💾 Memory pressure

- ⏱️ Latency behavior

- 🔁 Policy stability

While the architecture is extensible, distributed execution, multi-node coordination, and network-level variability are not yet within the active execution scope.

These limits are intentional. Atenia prioritizes predictable, stable, and survivable execution over aggressive optimization strategies that assume ideal hardware behavior.

🧩 Roadmap Status — APX

The development of Atenia Engine follows a structured APX progression based on concrete implementation milestones rather than conceptual phases.

APX-01 → APX-06

Foundational execution control, runtime observation, and initial adaptive mechanisms.

APX-07 → APX-10

Execution memory, stabilization logic, and controlled policy adaptation under noisy runtime conditions.

APX-11 → APX-12.14 — COMPLETED

Adaptive GPU profiling, execution intelligence consolidation, virtual execution modeling, predictive fallback, execution continuity, and learning across executions.

At the time of writing, APX-12 is fully completed.

Atenia Engine has transitioned from a system that merely executes workloads to one that reasons about execution behavior and adapts coherently under real-world conditions.

Future APX phases are outside the scope of this document and are intentionally left unspecified.

This whitepaper and the accompanying scientific paper describe a system whose core execution intelligence is already implemented, validated, and operational.

🏁 Conclusion: Execution as a First-Class System

The evolution of AI systems has been driven primarily by advances in models, training algorithms, and numerical optimization.

In contrast, execution has long been treated as a secondary concern — an implementation detail assumed to behave predictably once computation is defined.

This assumption no longer holds in real-world environments.

Atenia Engine demonstrates that many of the failures observed in modern AI systems are not computational in nature, but executional.

Instability, thrashing, aborts, and loss of execution continuity emerge when execution is forced to operate under static assumptions in dynamic and uncertain hardware conditions.

Addressing these failures requires elevating execution from a passive mechanism to an adaptive system in its own right.

By introducing execution intelligence as a first-class layer, Atenia Engine reframes execution as a process that:

- 👁️ Observes runtime behavior

- 🧠 Reasons about stability and risk

- 🔁 Adapts coherently over time

Crucially, this adaptation occurs without modifying computational semantics.

Model behavior remains deterministic and reproducible, while execution decisions evolve to reflect real hardware behavior rather than idealized assumptions.

The system presented here prioritizes stability, safety, and continuity over aggressive optimization.

Through:

- 🧱 Controlled adaptation

- 🛡 Predictive fallback

- 🧪 Virtual execution modeling

- ♻️ Persistent execution memory

Atenia Engine shows that execution can improve through experience without relying on machine learning or opaque control mechanisms.

Stability becomes the foundation upon which meaningful adaptation is built.

This whitepaper has focused on the architectural principles and observable behavior of Atenia Engine.

The accompanying scientific paper provides formal validation and experimental evidence for the claims summarized here.

Together, they demonstrate that execution intelligence is not an optional optimization, but a necessary system capability for operating AI workloads under real-world conditions.

Treating execution as a first-class system is not a refinement of existing approaches. It is a shift in perspective.

Atenia Engine offers a concrete demonstration of how this shift enables AI runtimes that remain reliable, resilient, and coherent when ideal assumptions inevitably break.